ECE 4900 Capstone: Autonomous Mapping UGV

Project Overview

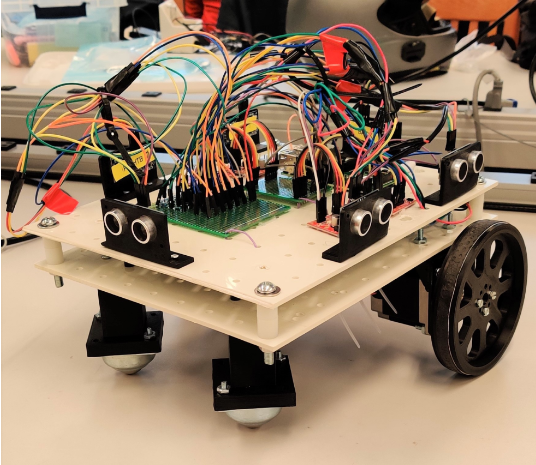

This senior capstone project addressed the need for Unmanned Ground Vehicles (UGVs) that can autonomously navigate hazardous or unknown environments. Our team designed and built an autonomous robot that maps indoor spaces, detects obstacles in real time with ultrasonic sensors, and calculates optimal escape routes with A* pathfinding.

Problem Statement: In emergency scenarios (search and rescue, building inspections, hazardous material incidents), first responders need situational awareness before entering dangerous areas. Our UGV provides autonomous mapping and pathfinding to help personnel understand building layouts and identify optimal routes.

System Design

Hardware Components

Microcontroller & Compute:

- Raspberry Pi 4 (primary controller for navigation and mapping algorithms)

- 24 GPIO pins for sensor and motor driver interfaces

Motors & Drive System:

- 2x Wantai NEMA 23 stepper motors (rear-wheel drive)

- 4x SparkFun Big Easy motor drivers (A4988-based)

- 2x 3D-printed caster wheels (front)

- 2x 3D-printed 4.5" drive wheels with rubber tape for traction

Sensors:

- 7x HC-SR04 ultrasonic sensors (range: 4cm - 4m)

- Mounted at front, sides, back, and underneath for obstacle detection

Power System:

- 12V power bank for stepper motors

- 5V USB power bank for Raspberry Pi

- Independent power supplies to prevent motor noise interference

Chassis:

- 2-tier polypropylene pegboard (1/8" thick, custom drilled)

- Dimensions: ~22" x 18" footprint

- 3D-printed sensor mounts and motor brackets

Software Architecture

Programming Language: Python 3.x

Development Environment: Raspberry Pi OS, SSH remote development

Key Algorithms:

- A* (A-Star) Pathfinding: Real-time optimal path calculation combining actual cost (G) and heuristic estimated cost (H)

- Dynamic A*: Continuously updates the map and replans the path as new obstacles are detected

- Motion Planning: 4-direction grid-based movement (forward, left, right, U-turn)
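The A* and replanning ideas above can be illustrated with a minimal sketch. This is not the project's Astar.py; the function names, the 0/1 grid encoding, and the Manhattan heuristic are assumptions chosen to match the 4-direction movement described here. Replanning is shown in its simplest form: mark the new obstacle in the grid and run the search again.

```python
import heapq

# The four grid moves available to the robot: (row delta, col delta).
NEIGHBORS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def manhattan(a, b):
    """Heuristic H: grid distance ignoring obstacles (never overestimates
    the true cost when only 4-direction moves of cost 1 are allowed)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid is a list of rows; 0 marks a free cell and 1 marks an obstacle
    (an assumed encoding, not necessarily the project's).
    """
    rows, cols = len(grid), len(grid[0])
    open_heap = [(manhattan(start, goal), 0, start)]  # entries: (F, G, cell)
    came_from = {}
    best_g = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g.get(cell, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        for dr, dc in NEIGHBORS:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue  # outside the map
            if grid[nxt[0]][nxt[1]] == 1:
                continue  # obstacle
            new_g = g + 1  # actual cost G: one unit per grid move
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                came_from[nxt] = cell
                f = new_g + manhattan(nxt, goal)  # F = G + H
                heapq.heappush(open_heap, (f, new_g, nxt))
    return None


# Plan, then replan after a newly detected obstacle blocks the first route.
grid = [
    [0, 0, 0],
    [0, 0, 0],
    [0, 0, 0],
]
first = astar(grid, (0, 0), (2, 0))
grid[1][0] = 1
replanned = astar(grid, (0, 0), (2, 0))
```

Rerunning the full search after every map change is the simplest form of replanning; incremental variants reuse earlier search results instead.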

Control Flow:

- Ultrasonic sensors trigger and measure distances

- Obstacle detection updates internal map representation

- A* algorithm calculates optimal path to exit/goal

- Motor control translates path into step commands

- Real-time position tracking and map updates
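The first step of this flow depends on turning an echo pulse width into a distance. A minimal sketch of that conversion follows, assuming a speed of sound of about 343 m/s at room temperature. The function names and the range check are illustrative and are not taken from Ultrasonic.py.

```python
# Approximate speed of sound in air at room temperature (assumption).
SPEED_OF_SOUND_CM_PER_S = 34300.0


def echo_to_distance_cm(echo_seconds):
    """Convert an HC-SR04 echo pulse width to a one-way distance in cm.

    The pulse covers the trip to the obstacle and back, hence the halving.
    """
    return echo_seconds * SPEED_OF_SOUND_CM_PER_S / 2.0


def in_range(distance_cm, min_cm=4.0, max_cm=400.0):
    """True if the reading lies inside the sensor range quoted above."""
    return min_cm <= distance_cm <= max_cm
```

For example, a 1 ms echo corresponds to roughly 17 cm.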

Key Modules

- Astar.py: Pathfinding algorithm implementation

- STEPPER.py: Motor control with direction and step logic

- Ultrasonic.py: Sensor reading with averaging filter

- GetObstacle.py: Obstacle detection and map updating

- Move.py: Coordinate motion commands with direction vectors

Implementation

Circuit Design

Started with breadboard testing of individual sensors and motors. Each HC-SR04 sensor was tested for accuracy and interference patterns. Motor drivers were bench-tested with single motors before integration.

PCB & Wiring: Custom prototype boards for sensor voltage dividers (5V → 3.3V for Raspberry Pi GPIO protection). All motor drivers shared common ground with Raspberry Pi. Extensive wire management through chassis drill-holes to prevent tangling during operation.
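The 5V → 3.3V step follows the standard resistor divider formula. The sketch below works through it; the 1 kΩ and 2 kΩ values are a common pairing used here as an assumption, since the resistor values actually used on the boards are not stated.

```python
def divider_output(v_in, r_top, r_bottom):
    """Output voltage of a two-resistor divider, measured across r_bottom."""
    return v_in * r_bottom / (r_top + r_bottom)


# Hypothetical values: 1 kOhm on top, 2 kOhm on the bottom.
v_echo = divider_output(5.0, 1000.0, 2000.0)  # about 3.33 V at the GPIO pin
```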

Firmware/Software Development

Object-oriented Python with separate modules for hardware interfaces (sensors, motors) and algorithms (pathfinding, mapping). Used NumPy for efficient matrix operations in pathfinding.

Key Challenges:

- Timing Synchronization: Ultrasonic sensors can interfere with one another if triggered simultaneously. Implemented sequential triggering with 1 ms delays between sensors.

- Stepper Motor Coordination: Initially used 4-wheel drive, but weight and power draw caused navigation failures. Switched to 2-wheel rear drive with front casters, reducing complexity and improving reliability.

- Coordinate System Mapping: Implemented transformation matrices to convert sensor readings from robot-relative coordinates to map-absolute coordinates based on heading.
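The coordinate mapping in the last item can be sketched for the four grid headings. The conventions here are assumptions for illustration: map x points east, map y points north, and a sensor reading is expressed as (forward, left) offsets in grid cells. Plain tuples stand in for NumPy arrays to keep the sketch dependency-free.

```python
# 2x2 rotation matrices for the four grid headings. Each matrix maps a
# robot-relative offset (forward, left) to a map offset (dx east, dy north).
ROTATIONS = {
    "E": ((1, 0), (0, 1)),
    "N": ((0, -1), (1, 0)),
    "W": ((-1, 0), (0, -1)),
    "S": ((0, 1), (-1, 0)),
}


def sensor_to_map(robot_xy, heading, forward, left):
    """Return the map cell of an obstacle seen at (forward, left) from the robot."""
    (a, b), (c, d) = ROTATIONS[heading]
    dx = a * forward + b * left
    dy = c * forward + d * left
    return (robot_xy[0] + dx, robot_xy[1] + dy)
```

With the robot at (2, 3) facing north, an obstacle two cells ahead lands at (2, 5), and one cell to the left lands at (1, 3).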

Memory Management: Raspberry Pi 4's 4GB RAM was more than sufficient. Primary constraint was computation time for pathfinding in large maps (optimized to <5 minutes per path calculation).

Testing & Debugging

Testing Methodology:

- Unit Testing: Each sensor tested individually at set distances (5cm - 300cm) to establish accuracy (±5-15cm acceptable error)

- Integration Testing: Motor + sensor synchronization tested in controlled hallway environments

- Algorithm Validation: Pathfinding tested in simulation before hardware deployment

Debug Tools:

- SSH terminal logging for real-time algorithm state

- LED indicators for system status (searching, moving, obstacle detected)

- Matplotlib for visualizing generated maps post-run

Major Issues & Solutions

- Issue: Robot drifted during rotation due to wheel slippage on smooth floors — Solution: Added rubber tape to wheels for friction, calibrated rotation step counts (130 steps per 90° turn)

- Issue: Ultrasonic sensors gave erratic readings when too close together (<10cm spacing) — Solution: Repositioned sensors with minimum 10cm separation, applied sliding average filter (10 samples)

- Issue: Four-wheel drive caused excessive current draw and motor stalling — Solution: Redesigned to two-wheel drive, reducing power consumption and mechanical complexity

Technical Challenges

Signal Noise & Filtering

Ultrasonic sensors produced noisy distance readings, especially near reflective surfaces. Implemented a sliding average filter (10-sample window) to smooth readings and reduce false obstacle detection.
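A sliding average of this kind can be sketched in a few lines. The class name and interface are illustrative, not the project's Ultrasonic.py; only the 10-sample window comes from the description above.

```python
from collections import deque


class SlidingAverage:
    """Average of the most recent `window` readings."""

    def __init__(self, window=10):
        # A bounded deque drops the oldest sample automatically.
        self.samples = deque(maxlen=window)

    def update(self, reading_cm):
        """Add a reading and return the current windowed mean."""
        self.samples.append(reading_cm)
        return sum(self.samples) / len(self.samples)
```

Until the window fills, the mean is taken over the samples received so far, so the first few outputs follow the raw readings more closely.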

Timing & Synchronization

Coordinating stepper motor steps between left and right motors required precise timing. Used Python's time.sleep() with 4.5ms step delays to ensure smooth, synchronized motion.
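The lockstep stepping described here might look like the following sketch. `pulse_left` and `pulse_right` are hypothetical callbacks standing in for whatever toggles each driver's STEP pin (for example via RPi.GPIO); they are not functions from STEPPER.py. The sleep function is injectable so the loop can be exercised without hardware.

```python
import time


def step_both(pulse_left, pulse_right, steps, step_delay=0.0045, sleep=time.sleep):
    """Issue `steps` step pulses to both motors, one pair per delay period.

    Pulsing the two drivers back to back and then waiting keeps the wheels
    on the same step count throughout the move.
    """
    for _ in range(steps):
        pulse_left()
        pulse_right()
        sleep(step_delay)  # 4.5 ms between step pairs, per the text above
```

Note that time.sleep() on a general-purpose Linux system guarantees only a minimum delay, so actual step spacing can run slightly longer than requested.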

Power Consumption

Initial 4-motor design drained batteries rapidly (<30 minutes runtime). Switching to 2-motor drive extended battery life to meet 60-minute specification.

Hardware/Software Integration

Translating algorithmic path (grid coordinates) into motor commands (step counts and directions) required careful calibration. Developed transformation matrices to handle rotation and coordinate system conversions.
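A sketch of that translation is shown below, turning a list of grid cells into forward, left, right, and U-turn commands with step counts. The 130 steps per 90° figure is the calibration value reported in this write-up. `STEPS_PER_CELL`, the command names, the assumption that a U-turn costs two quarter turns, and the (row, col) convention with rows increasing southward are all hypothetical.

```python
# Headings as (row delta, col delta), listed clockwise: N, E, S, W.
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]

STEPS_PER_90_DEG = 130  # calibrated value from this write-up
STEPS_PER_CELL = 400    # hypothetical; depends on wheel size and cell size

# Number of clockwise quarter turns mapped to a command name and step count.
TURN_NAMES = {1: "right", 2: "u_turn", 3: "left"}
TURN_STEPS = {1: STEPS_PER_90_DEG, 2: 2 * STEPS_PER_90_DEG, 3: STEPS_PER_90_DEG}


def path_to_commands(path, heading_index):
    """Convert a list of adjacent (row, col) cells into (command, steps) pairs.

    heading_index is the robot's starting heading as an index into HEADINGS.
    """
    commands = []
    for (r0, c0), (r1, c1) in zip(path, path[1:]):
        target = HEADINGS.index((r1 - r0, c1 - c0))
        turn = (target - heading_index) % 4  # clockwise quarter turns needed
        if turn:
            commands.append((TURN_NAMES[turn], TURN_STEPS[turn]))
            heading_index = target
        commands.append(("forward", STEPS_PER_CELL))
    return commands
```

For a robot facing south (index 2), the path [(0, 0), (0, 1), (1, 1)] becomes a left turn, one cell forward, a right turn, and one more cell forward.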

Results & Performance

The UGV successfully navigated a demonstration course and exhibited all required capabilities: autonomous mapping, obstacle detection and avoidance, and path planning using the A* family of algorithms. The system met the project's functional requirements and performed reliably during testing runs.

Skills Demonstrated

- Python Programming: Object-oriented design, NumPy for matrix operations, hardware interfacing with RPi.GPIO

- Robotics & Kinematics: Stepper motor control, coordinate transformations, odometry

- Algorithm Design: A* pathfinding, graph search, heuristic optimization

- Embedded Systems: Raspberry Pi GPIO, sensor integration, real-time control loops

- Hardware Design: Motor driver circuits, voltage regulation, mechanical assembly

- Systems Engineering: Requirements analysis, design iteration, integration testing

- Technical Communication: Design reports, presentations, engineering documentation

- Team Collaboration: 6-person team with divided responsibilities (hardware, navigation, mapping)

Lessons Learned & Future Work

Key Takeaways:

- Simplicity often beats complexity: switching from 4-wheel to 2-wheel drive dramatically improved reliability

- A well-chosen heuristic lets A* deliver both speed and optimality

- Robust testing (unit → integration → system) catches issues early

Potential Improvements:

- Upgrade to LIDAR for more accurate 2D mapping (ultrasonic has limited angular resolution)

- Implement SLAM (Simultaneous Localization and Mapping) for larger, more complex environments

- Add wireless telemetry for real-time map visualization on remote display

- Enclosed chassis with transparent dome for better aesthetics and cable management

Professor: Haskell Jac Fought • Course: ECE 4900 Capstone Design II

Institution: The Ohio State University, Department of Electrical and Computer Engineering

This project fulfilled the requirements for Ohio State's ECE capstone sequence, demonstrating proficiency in embedded systems design, algorithm development, and interdisciplinary engineering problem-solving.